Neural Networks

Logistic Regression

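Let $x = [x_1, \ldots, x_n]$ be a vector representing an input instance, where $x_i$ denotes the $i$'th feature of the input, and let $y \in \{0, 1\}$ be its corresponding output label. Logistic regression uses the logistic function, aka. the sigmoid function, to estimate the probability that $x$ belongs to $y$:

$$
\begin{aligned}
P(y=1 \mid x) &= \frac{1}{1 + e^{-(x \cdot w^T + b)}} \\
P(y=0 \mid x) &= 1 - P(y=1 \mid x)
\end{aligned}
$$

The weight vector $w = [w_1, \ldots, w_n]$ assigns weights to each dimension of the input vector $x$ for the label $y = 1$, such that a higher magnitude of weight $w_i$ indicates greater importance of the feature $x_i$. Finally, $b$ represents the bias of the label $y = 1$ within the training distribution.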
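The two probability equations can be sketched in plain Python as follows. This sketch assumes dense list-based vectors. The names `sigmoid` and `logistic_proba` are illustrative and do not come from any particular library. The sigmoid branches on the sign of its input so that `math.exp` never receives a large positive argument.

```python
import math

def sigmoid(z: float) -> float:
    """Logistic (sigmoid) function, mapping any real number into (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    # For negative z, use the equivalent form e^z / (1 + e^z) so exp() cannot overflow.
    e = math.exp(z)
    return e / (1.0 + e)

def logistic_proba(x: list[float], w: list[float], b: float) -> tuple[float, float]:
    """Return (P(y=1|x), P(y=0|x)) for logistic regression with weights w and bias b."""
    z = sum(xi * wi for xi, wi in zip(x, w)) + b  # x . w^T + b
    p1 = sigmoid(z)
    return p1, 1.0 - p1
```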

Q7: What role does the sigmoid function play in the logistic regression model?

Consider a corpus consisting of two sentences:

D1: I love this movie

D2: I hate this movie

The input vectors $x_1$ and $x_2$ can be created for these two sentences using the bag-of-words model:

V = {0: "I", 1: "love", 2: "hate", 3: "this", 4: "movie"}

x1 = [1, 1, 0, 1, 1]

x2 = [1, 0, 1, 1, 1]
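Here is a minimal sketch of how `x1` and `x2` can be built from `V`. It assumes whitespace tokenization and raw term counts. Counts and binary indicators give the same vectors here because no word repeats within a sentence. The helper name `bag_of_words` is hypothetical.

```python
def bag_of_words(tokens: list[str], vocab: dict[int, str]) -> list[int]:
    """Count how many times each vocabulary term occurs in the token list."""
    index = {term: i for i, term in vocab.items()}  # term -> vector position
    vector = [0] * len(vocab)
    for token in tokens:
        if token in index:  # out-of-vocabulary tokens are ignored
            vector[index[token]] += 1
    return vector

V = {0: "I", 1: "love", 2: "hate", 3: "this", 4: "movie"}
x1 = bag_of_words("I love this movie".split(), V)
x2 = bag_of_words("I hate this movie".split(), V)
print(x1)  # [1, 1, 0, 1, 1]
print(x2)  # [1, 0, 1, 1, 1]
```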

Let $y_1 = 1$ and $y_2 = 0$ be the output labels of $x_1$ and $x_2$, representing positive and negative sentiments of the input sentences, respectively. Then, a weight vector $w$ can be trained using logistic regression:

w = [0.0, 1.5, -1.5, 0.0, 0.0]

b = 0

Since the terms "I", "this", and "movie" appear with equal frequency across both labels, their weights $w_1$, $w_4$, and $w_5$ are neutralized (set to 0). On the other hand, the terms "love" and "hate" appear only with the positive and negative labels, respectively. Therefore, the weight for "love" ($w_2 = 1.5$) pushes the prediction toward the positive label $y = 1$, while the weight for "hate" ($w_3 = -1.5$) pushes it toward the negative label $y = 0$. Furthermore, the positive and negative sentiment labels are equally represented in this corpus, so the bias $b$ is also set to 0.

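Given the weight vector and the bias, we have $x_1 \cdot w^T + b = 1.5$ and $x_2 \cdot w^T + b = -1.5$, resulting in the following probabilities:

$$
\begin{aligned}
P(y=1 \mid x_1) &= \frac{1}{1 + e^{-1.5}} \approx 0.82 \\
P(y=1 \mid x_2) &= \frac{1}{1 + e^{1.5}} \approx 0.18
\end{aligned}
$$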

The probability of $x_1$ being $y = 1$ exceeds $0.5$ (50%), so the model predicts that the first sentence conveys a positive sentiment. Conversely, the probability of $x_2$ being $y = 1$ is below 50%, so the model predicts that the second sentence conveys a negative sentiment.
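The arithmetic above can be checked with a short script. It plugs the trained `w` and `b` into the sigmoid and predicts label 1 when the probability exceeds 0.5, the threshold used in the text.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

w = [0.0, 1.5, -1.5, 0.0, 0.0]  # weights for "I", "love", "hate", "this", "movie"
b = 0.0

inputs = {
    "x1": [1, 1, 0, 1, 1],  # "I love this movie"
    "x2": [1, 0, 1, 1, 1],  # "I hate this movie"
}

results = {}
for name, x in inputs.items():
    z = sum(xi * wi for xi, wi in zip(x, w)) + b  # x . w^T + b
    p = sigmoid(z)                                # P(y=1|x)
    results[name] = (z, p, 1 if p > 0.5 else 0)   # (score, probability, predicted label)
    print(name, z, round(p, 2), results[name][2])
# x1 1.5 0.82 1
# x2 -1.5 0.18 0
```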

Q8: Under what circumstances would the bias be negative in the above example? Additionally, when might neutral terms such as "this" or "movie" exhibit non-neutral weights?

Softmax Regression

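Softmax regression, aka. multinomial logistic regression, is an extension of logistic regression that handles classification problems with more than two classes. Given an input vector $x \in \mathbb{R}^{1 \times n}$ and its output label $y \in \{1, \ldots, m\}$, the model uses the softmax function to estimate the probability that $x$ belongs to each class $k$ separately:

$$
P(y=k \mid x) = \frac{e^{x \cdot w_k^T + b_k}}{\sum_{j=1}^{m} e^{x \cdot w_j^T + b_j}}
$$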

The weight vector $w_k$ assigns weights to $x$ for the label $y = k$, while $b_k$ represents the bias associated with the label $y = k$.
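Here is a minimal sketch of the softmax probability estimate. It assumes list-based vectors, with one weight vector and one bias per label. The names `softmax` and `softmax_proba` are illustrative. The code subtracts the largest score before exponentiating. This leaves the probabilities unchanged, because the common factor cancels in the fraction, and it keeps `math.exp` from overflowing.

```python
import math

def softmax(scores: list[float]) -> list[float]:
    """Convert raw scores into probabilities that are positive and sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]  # shift by the max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]

def softmax_proba(x: list[float], ws: list[list[float]], bs: list[float]) -> list[float]:
    """Return [P(y=1|x), ..., P(y=m|x)] given one weight vector and one bias per label."""
    scores = [sum(xi * wi for xi, wi in zip(x, w)) + b for w, b in zip(ws, bs)]  # x . w_k^T + b_k
    return softmax(scores)
```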

Q9: What is the role of the softmax function in the softmax regression model? How does it differ from the sigmoid function?

Consider a corpus consisting of three sentences:

D1: I love this movie

D2: I hate this movie

D3: I watched this movie

Then, the input vectors $x_1$, $x_2$, and $x_3$ for the sentences can be created using the bag-of-words model:

V = {0: "I", 1: "love", 2: "hate", 3: "this", 4: "movie", 5: "watched"}

x1 = [1, 1, 0, 1, 1, 0]

x2 = [1, 0, 1, 1, 1, 0]

x3 = [1, 0, 0, 1, 1, 1]

Let $y_1 = 1$, $y_2 = 2$, and $y_3 = 3$ be the output labels of $x_1$, $x_2$, and $x_3$, representing positive, negative, and neutral sentiments of the input sentences, respectively. Then, weight vectors $w_1$, $w_2$, and $w_3$ can be trained using softmax regression as follows:

w1 = [0.0, 1.5, -1.0, 0.0, 0.0, 0.0]

w2 = [0.0, -1.0, 1.5, 0.0, 0.0, 0.0]

w3 = [0.0, -1.0, -1.0, 0.0, 0.0, 1.5]

b1 = b2 = b3 = 0

In logistic regression, all weights are oriented to $y = 1$: the weights for "love" and "hate" give positive and negative evidence for $y = 1$, respectively, while $y = 0$ has no weights of its own. Softmax regression differs in that the values in each weight vector are oriented to their corresponding label.

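Given the weight vectors and the biases, we have $x_1 \cdot w_1^T + b_1 = 1.5$, $x_1 \cdot w_2^T + b_2 = -1.0$, and $x_1 \cdot w_3^T + b_3 = -1.0$. From these scores, we can estimate the following probabilities for $x_1$:

$$
\begin{aligned}
P(y=1 \mid x_1) &= \frac{e^{1.5}}{e^{1.5} + e^{-1.0} + e^{-1.0}} \approx 0.86 \\
P(y=2 \mid x_1) &= \frac{e^{-1.0}}{e^{1.5} + e^{-1.0} + e^{-1.0}} \approx 0.07 \\
P(y=3 \mid x_1) &= \frac{e^{-1.0}}{e^{1.5} + e^{-1.0} + e^{-1.0}} \approx 0.07
\end{aligned}
$$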

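The probability of $y = 1$ is the highest among all labels, so the model predicts that the first sentence conveys a positive sentiment. For $x_3$, we have $x_3 \cdot w_1^T + b_1 = 0$, $x_3 \cdot w_2^T + b_2 = 0$, and $x_3 \cdot w_3^T + b_3 = 1.5$. This gives the following probabilities:

$$
\begin{aligned}
P(y=1 \mid x_3) &= \frac{e^{0}}{e^{0} + e^{0} + e^{1.5}} \approx 0.15 \\
P(y=2 \mid x_3) &= \frac{e^{0}}{e^{0} + e^{0} + e^{1.5}} \approx 0.15 \\
P(y=3 \mid x_3) &= \frac{e^{1.5}}{e^{0} + e^{0} + e^{1.5}} \approx 0.69
\end{aligned}
$$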

The probability of $y = 3$ is the highest among all labels, so the model predicts that the third sentence conveys a neutral sentiment.
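The probabilities for $x_1$ and $x_3$ can be reproduced with the short script below. It uses the trained weight vectors and zero biases from the example. Label indices in the lists are zero-based, so position 0 corresponds to $y = 1$.

```python
import math

def softmax(scores):
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]  # shift by the max for stability
    total = sum(exps)
    return [e / total for e in exps]

W = [
    [0.0, 1.5, -1.0, 0.0, 0.0, 0.0],   # w1: positive
    [0.0, -1.0, 1.5, 0.0, 0.0, 0.0],   # w2: negative
    [0.0, -1.0, -1.0, 0.0, 0.0, 1.5],  # w3: neutral
]
B = [0.0, 0.0, 0.0]
x1 = [1, 1, 0, 1, 1, 0]  # "I love this movie"
x3 = [1, 0, 0, 1, 1, 1]  # "I watched this movie"

def probs(x):
    """P(y=k|x) for k = 1..3, computed from x . w_k^T + b_k."""
    scores = [sum(xi * wi for xi, wi in zip(x, w)) + b for w, b in zip(W, B)]
    return softmax(scores)

p1 = probs(x1)
p3 = probs(x3)
print([round(p, 2) for p in p1])  # [0.86, 0.07, 0.07]
print([round(p, 2) for p in p3])  # [0.15, 0.15, 0.69]
```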

Softmax regression always predicts $m$ values, so its output can be represented by a vector $y \in \mathbb{R}^{1 \times m}$, wherein the $i$'th value in $y$ contains the probability of the input belonging to the $i$'th class. Similarly, the weight vectors for all labels can be stacked into a weight matrix $W \in \mathbb{R}^{m \times n}$, where the $i$'th row represents the weight vector for the $i$'th label.

With this formulation, softmax regression can be defined as $y = \mathrm{softmax}(x \cdot W^T + b)$, where $b = [b_1, \ldots, b_m]$ stacks the biases. The optimal prediction can then be made as $\arg\max(y)$, which returns the label with the highest probability.
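The matrix form can be sketched without external libraries as follows. The sketch assumes `W` is stored as a list of rows, one per label, and `b` as a list of biases. The helper names are hypothetical. This `argmax` returns the first index among tied maxima, so it always yields a single label.

```python
import math

def softmax(scores):
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def softmax_regression(x, W, b):
    """y = softmax(x . W^T + b), where each row of W is the weight vector of one label."""
    scores = [sum(xi * wi for xi, wi in zip(x, row)) + bk for row, bk in zip(W, b)]
    return softmax(scores)

def argmax(y):
    """Index of the largest value; the first one wins on ties."""
    return max(range(len(y)), key=lambda i: y[i])

W = [
    [0.0, 1.5, -1.0, 0.0, 0.0, 0.0],   # positive
    [0.0, -1.0, 1.5, 0.0, 0.0, 0.0],   # negative
    [0.0, -1.0, -1.0, 0.0, 0.0, 1.5],  # neutral
]
b = [0.0, 0.0, 0.0]
X = [
    [1, 1, 0, 1, 1, 0],  # "I love this movie"
    [1, 0, 1, 1, 1, 0],  # "I hate this movie"
    [1, 0, 0, 1, 1, 1],  # "I watched this movie"
]

# Zero-based indices: 0 = positive, 1 = negative, 2 = neutral.
predictions = [argmax(softmax_regression(x, W, b)) for x in X]
print(predictions)  # [0, 1, 2]
```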

What are the limitations of the softmax regression model?

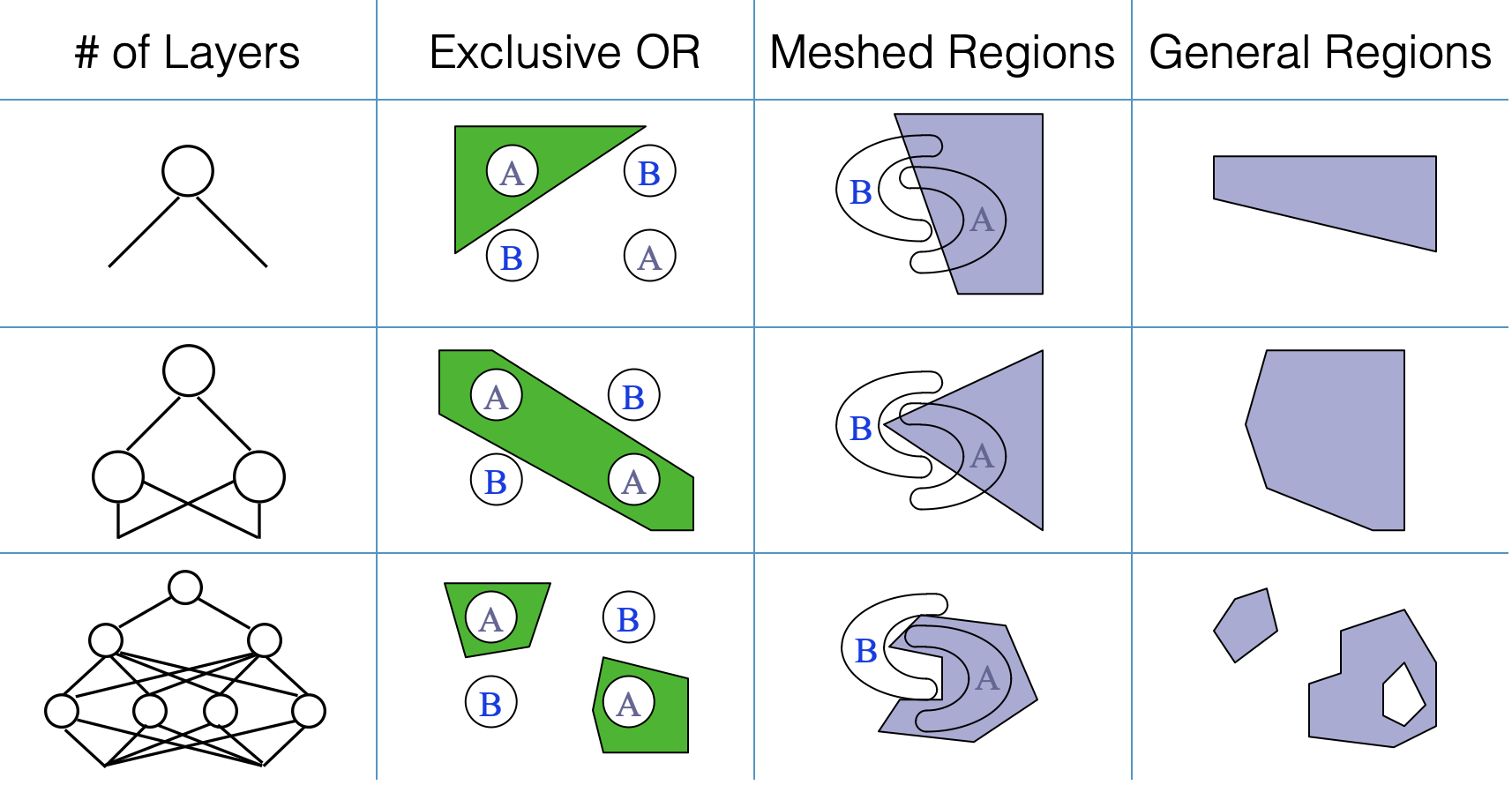

Multilayer Perceptron

A multilayer perceptron (MLP) is a type of feedforward neural network consisting of multiple layers of neurons, where all neurons in one layer are fully connected to all neurons in its adjacent layers. Given an input vector $x \in \mathbb{R}^{1 \times n}$ and an output vector $y \in \mathbb{R}^{1 \times m}$, the model allows zero to many hidden layers to generate intermediate representations of the input.

Let $h \in \mathbb{R}^{1 \times d}$ be a hidden layer between $x$ and $y$. To connect $x$ and $h$, we need a weight matrix $W_x \in \mathbb{R}^{n \times d}$ such that $h = \mathrm{activation}(x \cdot W_x)$, where $\mathrm{activation}()$ is an activation function applied to the output of each neuron. It introduces non-linearity into the network, which allows the network to learn complex patterns and relationships in the data. Activation functions determine whether a neuron should be activated, that is, whether the neuron's output should be passed on to the next layer.

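Similarly, to connect $h$ and $y$, we need a weight matrix $W_h \in \mathbb{R}^{m \times d}$ whose $i$'th row holds the weights for the $i$'th label, as in softmax regression, such that $y = \mathrm{softmax}(h \cdot W_h^T)$. Thus, a multilayer perceptron with one hidden layer can be represented as:

$$
y = \mathrm{softmax}(\mathrm{activation}(x \cdot W_x) \cdot W_h^T)
$$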
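A one-hidden-layer MLP can be sketched as a plain-Python forward pass. The sketch assumes `Wx` is stored as $n$ rows of $d$ values. It also assumes `Wh` is stored as $m$ rows of $d$ values, one row per label as in softmax regression, so the output scores are $h \cdot W_h^T$. No biases are included. The activation is passed in as a parameter. ReLU appears in the usage lines only as an assumed example, because no particular activation is prescribed at this point.

```python
import math
from typing import Callable

def softmax(scores: list[float]) -> list[float]:
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def mlp_forward(x: list[float], Wx: list[list[float]], Wh: list[list[float]],
                activation: Callable[[float], float]) -> list[float]:
    """One-hidden-layer MLP without biases: y = softmax(activation(x . Wx) . Wh^T)."""
    d = len(Wx[0])
    g = [sum(x[i] * Wx[i][j] for i in range(len(x))) for j in range(d)]  # x . Wx
    h = [activation(v) for v in g]                                      # hidden layer
    o = [sum(hj * wj for hj, wj in zip(h, row)) for row in Wh]           # h . Wh^T
    return softmax(o)

def relu(v: float) -> float:
    """An assumed example activation: max(0, v)."""
    return max(0.0, v)

identity = [[1.0, 0.0], [0.0, 1.0]]
y = mlp_forward([1.0, 0.0], identity, identity, relu)
```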

Q10: Notice that the above equation for MLP does not include bias terms. How are biases handled in light of this formulation?

Consider a corpus comprising the following five sentences and their corresponding labels:

D1: I love this movie ⇒ positive

D2: I hate this movie ⇒ negative

D3: I watched this movie ⇒ neutral

D4: I truly love this movie ⇒ very positive

D5: I truly hate this movie ⇒ very negative

The input vectors $x_1, \ldots, x_5$ can be created using the bag-of-words model over the vocabulary X, and the output labels are indexed by Y:

X = {0: "I", 1: "love", 2: "hate", 3: "this", 4: "movie", 5: "watched", 6: "truly"}

Y = {0: "positive", 1: "negative", 2: "neutral", 3: "very positive", 4: "very negative"}

x1 = [1, 1, 0, 1, 1, 0, 0]

x2 = [1, 0, 1, 1, 1, 0, 0]

x3 = [1, 0, 0, 1, 1, 1, 0]

x4 = [1, 1, 0, 1, 1, 0, 1]

x5 = [1, 0, 1, 1, 1, 0, 1]

y1, y2, y3, y4, y5 = 0, 1, 2, 3, 4

Q11: What would be the weight assigned to the feature "truly" learned by softmax regression for the above example?

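Training an MLP on this corpus can yield the following first weight matrix $W_x \in \mathbb{R}^{7 \times 5}$, whose rows correspond to the seven vocabulary terms: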

Wx = [

[0.0, 0.0, 0.0, 0.0, 0.0],

[1.0, 0.0, 0.0, 0.5, 0.0],

[0.0, 1.0, 0.0, 0.0, 0.5],

[0.0, 0.0, 0.0, 0.0, 0.0],

[0.0, 0.0, 0.0, 0.0, 0.0],

[0.0, 0.0, 1.0, 0.0, 0.0],

[0.0, 0.0, 0.0, 0.5, 0.5]

]

Given the values in $W_x$, we can infer that the first, second, and third columns represent "love", "hate", and "watched". The fourth and fifth columns learn combined features such as _truly love_ and _truly hate_, respectively.

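Each $x_i$ is multiplied by $W_x$ to obtain $g_i = x_i \cdot W_x$. The activation function is then applied element-wise to $g_i$ to produce the hidden layer $h_i = \mathrm{activation}(g_i)$. The activation function is designed as follows:

$$
\mathrm{activation}(v) = \begin{cases} v & \text{if } v > 0.5 \\ 0 & \text{otherwise} \end{cases}
$$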

g1 = [1.0, 0.0, 0.0, 0.5, 0.0]

g2 = [0.0, 1.0, 0.0, 0.0, 0.5]

g3 = [0.0, 0.0, 1.0, 0.0, 0.0]

g4 = [1.0, 0.0, 0.0, 1.0, 0.5]

g5 = [0.0, 1.0, 0.0, 0.5, 1.0]

h1 = activation(g1) = [1.0, 0.0, 0.0, 0.0, 0.0]

h2 = activation(g2) = [0.0, 1.0, 0.0, 0.0, 0.0]

h3 = activation(g3) = [0.0, 0.0, 1.0, 0.0, 0.0]

h4 = activation(g4) = [1.0, 0.0, 0.0, 1.0, 0.0]

h5 = activation(g5) = [0.0, 1.0, 0.0, 0.0, 1.0]
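The hidden layers $h_1, \ldots, h_5$ listed above can be reproduced with the sketch below. The sketch assumes an element-wise threshold activation that keeps a value if it exceeds 0.5 and sets it to 0 otherwise. This is one choice consistent with the listed $g_i$ and $h_i$. Any cutoff from 0.5 up to, but not including, 1.0 would give the same result for this data.

```python
def activation(v: float) -> float:
    """Assumed threshold activation: pass v through if v > 0.5, otherwise output 0."""
    return v if v > 0.5 else 0.0

# Rows follow the vocabulary order: I, love, hate, this, movie, watched, truly.
Wx = [
    [0.0, 0.0, 0.0, 0.0, 0.0],
    [1.0, 0.0, 0.0, 0.5, 0.0],
    [0.0, 1.0, 0.0, 0.0, 0.5],
    [0.0, 0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.5, 0.5],
]
X = [
    [1, 1, 0, 1, 1, 0, 0],  # I love this movie
    [1, 0, 1, 1, 1, 0, 0],  # I hate this movie
    [1, 0, 0, 1, 1, 1, 0],  # I watched this movie
    [1, 1, 0, 1, 1, 0, 1],  # I truly love this movie
    [1, 0, 1, 1, 1, 0, 1],  # I truly hate this movie
]

def hidden_layer(x):
    """Return (g, h) where g = x . Wx and h applies the activation element-wise."""
    g = [sum(x[i] * Wx[i][j] for i in range(len(x))) for j in range(len(Wx[0]))]
    return g, [activation(v) for v in g]

H = []
for k, x in enumerate(X, start=1):
    g, h = hidden_layer(x)
    H.append(h)
    print(f"g{k} = {g}  h{k} = {h}")
```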

Training can likewise yield the following second weight matrix $W_h \in \mathbb{R}^{5 \times 5}$, whose $i$'th row holds the weights for the $i$'th label:

Wh = [

[ 1.0, -1.0, 0.0, -0.5, -1.0],

[-1.0, 1.0, 0.0, -1.0, -0.5],

[-1.0, -1.0, 1.0, -1.0, -1.0],

[ 0.0, -1.0, 0.0, 1.0, -1.0],

[-1.0, 0.0, 0.0, -1.0, 1.0]

]

Each $h_i$ is multiplied by $W_h^T$ to obtain $o_i = h_i \cdot W_h^T$. Applying the softmax function to each $o_i$ then gives the corresponding output vector $y_i$:

o1 = [ 1.0, -1.0, -1.0, 0.0, -1.0]

o2 = [-1.0, 1.0, -1.0, -1.0, 0.0]

o3 = [ 0.0, 0.0, 1.0, 0.0, 0.0]

o4 = [ 0.5, -2.0, -2.0, 1.0, -2.0]

o5 = [-2.0, 0.5, -2.0, -2.0, 1.0]

y1 = softmax(o1) = [0.56, 0.08, 0.08, 0.21, 0.08]

y2 = softmax(o2) = [0.08, 0.56, 0.08, 0.08, 0.21]

y3 = softmax(o3) = [0.15, 0.15, 0.40, 0.15, 0.15]

y4 = softmax(o4) = [0.35, 0.03, 0.03, 0.57, 0.03]

y5 = softmax(o5) = [0.03, 0.35, 0.03, 0.03, 0.57]

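The prediction for each sentence can be made by taking $\arg\max(y_i)$. This returns the labels 0 (positive), 1 (negative), 2 (neutral), 3 (very positive), and 4 (very negative) for D1 to D5, respectively, matching the labels given in the corpus.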
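The output layer can be checked as follows. The script starts from the hidden vectors $h_1, \ldots, h_5$ and the matrix $W_h$ above, computes $o_i = h_i \cdot W_h^T$, and then applies softmax and argmax. This is a plain-Python sketch, and the label names follow `Y` from the example.

```python
import math

def softmax(scores):
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

Y = ["positive", "negative", "neutral", "very positive", "very negative"]
# Each row of Wh holds the hidden-layer weights for one label.
Wh = [
    [ 1.0, -1.0, 0.0, -0.5, -1.0],
    [-1.0,  1.0, 0.0, -1.0, -0.5],
    [-1.0, -1.0, 1.0, -1.0, -1.0],
    [ 0.0, -1.0, 0.0,  1.0, -1.0],
    [-1.0,  0.0, 0.0, -1.0,  1.0],
]
H = [
    [1.0, 0.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0, 0.0],
    [1.0, 0.0, 0.0, 1.0, 0.0],
    [0.0, 1.0, 0.0, 0.0, 1.0],
]

predictions = []
for k, h in enumerate(H, start=1):
    o = [sum(hj * wj for hj, wj in zip(h, row)) for row in Wh]  # o_k = h_k . Wh^T
    y = softmax(o)
    label = max(range(len(y)), key=lambda i: y[i])  # argmax
    predictions.append(label)
    print(f"y{k} = {[round(p, 2) for p in y]} -> {Y[label]}")
```

Running it predicts positive, negative, neutral, very positive, and very negative for the five sentences, in order.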

Q12: What are the limitations of a multilayer perceptron?
